Apple is known for its attention to detail, and with iOS 18 and macOS Sequoia the company is making a significant leap in accessibility. The latest updates to Voice Control introduce much-needed enhancements that make the feature not just usable, but indispensable for disabled users who rely on voice dictation instead of using the keyboard.

Understanding Voice Control

While Siri enables basic text dictation on Apple devices, severely disabled people often require more comprehensive functionality. They need the ability to control their devices entirely by voice—whether that’s launching apps, dictating, editing text, or navigating their device.

To address these needs, Apple introduced a powerful accessibility tool known as Voice Control. Debuting in 2019 with iOS 13 and macOS Catalina, Voice Control allows users to seamlessly integrate spoken commands and dictation, providing a more robust and versatile hands-free experience.

Five years in the making

For years, users who depend on Voice Control have struggled to achieve accurate and grammatically correct text input. While Apple’s Voice Control has been a powerful tool for those with limited mobility, it lacked some essential features, particularly in custom vocabulary management. This gap often caused frustration, as users had to manually correct capitalisation errors in proper nouns, which disrupted the flow and efficiency of their work.

Persistent advocacy from the accessibility community, including myself, has finally borne fruit. The recent updates in iOS 18 public beta 3 and macOS Sequoia have introduced automatic capitalisation for proper nouns added to custom vocabularies, making the app not just functional, but truly productive.

These may seem like small tweaks, but they have a massive impact on usability and productivity for people with the severest disabilities who can’t access the keyboard.

Key features of the iOS 18 and macOS Sequoia Voice Control update

The latest update finally makes Voice Control a game-changer for users who need it the most. Here’s what’s new:

1. Automatic capitalisation of proper nouns: No more manual corrections—proper nouns added to custom vocabulary are now capitalised automatically when you dictate.

2. Adherence to basic grammar rules: Voice Control now follows basic grammar conventions, making your dictated text more accurate and readable.

3. Increased productivity: These updates allow users to communicate more effectively and efficiently, whether in work, education, or online social life.

The impact on daily life

For many, what seems like a small update is actually a monumental change. Being able to communicate effortlessly and accurately is crucial in today’s fast-paced world. Whether you’re writing an email, composing a document, or participating in online discussions, these new features in Voice Control make these tasks more accessible than ever before.

As someone with limited use of my hands and arms, as well as breathing difficulties affecting my speech, this development has been transformative. Tasks that were once laborious and time-consuming are now streamlined, allowing me to focus on what truly matters—communication, creativity, and connection.

Recognition in the tech community

The significance of this update has not gone unnoticed. Ben Lovejoy of 9to5Mac covered the development in a detailed article after I spoke to him last week, highlighting the importance of these changes. Aaron Zollo, a prominent Apple YouTuber with 1.5 million followers, also featured them in a recent video, emphasising how they contribute to a more inclusive tech landscape. It’s deeply gratifying to see these advancements acknowledged by a wider audience, validating the years of advocacy and effort I have invested in helping to make these improvements a reality.

Room for improvement

Up until now, Voice Control dictation has been something of a blunt instrument. The app doesn’t learn from recognition errors, leading to compromised accuracy and significant frustration for users, especially those with impaired or atypical speech or breathing difficulties. Repeatedly correcting the same errors can be physically taxing and detrimental to productivity.

Currently, it’s unclear whether adding and recording phrases in Voice Control’s custom vocabulary will alleviate this issue, but we can certainly hope it will. Ideally, I would like to see Voice Control dictation evolve to function more like Dragon Professional, the industry leader on Windows computers, which learns from mistakes and continuously improves. This is the level of adaptability and learning that disabled users need from Voice Control.

It remains to be seen what impact Apple Intelligence and AI will have on Voice Control, but I hope that, in time, it will lead to a significantly improved user experience.

Custom vocabulary training is crucial for long-form dictation on devices like the MacBook Pro, where accuracy and personalisation in longer emails and documents can significantly enhance both user experience and productivity.

Currently, however, the public beta of macOS Sequoia lacks the ability to record custom vocabulary—a feature that is already available in the iOS 18 public beta. The absence of this functionality in macOS Sequoia is notable, but hopefully, it’s due to the early stage of the beta cycle. With any luck, this feature will be included in macOS Sequoia before its official release in the autumn.

Conclusion: a new era of accessibility

One might wonder why a seemingly straightforward fix in programming terms took Apple five years to implement, especially after being repeatedly informed of the issue. The likely explanation is that Apple’s developers have been intensely focused on Apple Intelligence, and now that Voice Control fits neatly within this framework, it has finally become a priority.

The recent updates to Apple’s Voice Control in iOS 18 and macOS Sequoia go beyond simply adding new features—they signal the beginning of a new era in accessibility, where technology is truly designed to meet the needs of all users. This is a significant victory for the accessibility community and demonstrates the power of persistent advocacy.

As Apple continues to innovate, it’s clear that accessibility is not just an afterthought, but a central consideration in the development of its products. I am eager to see what new advancements Apple will bring to the world of accessibility in the future.

Worth knowing

I have been testing Voice Control in the public betas of iOS 18 and macOS Sequoia. The final versions of the software will be released to the public in the autumn.

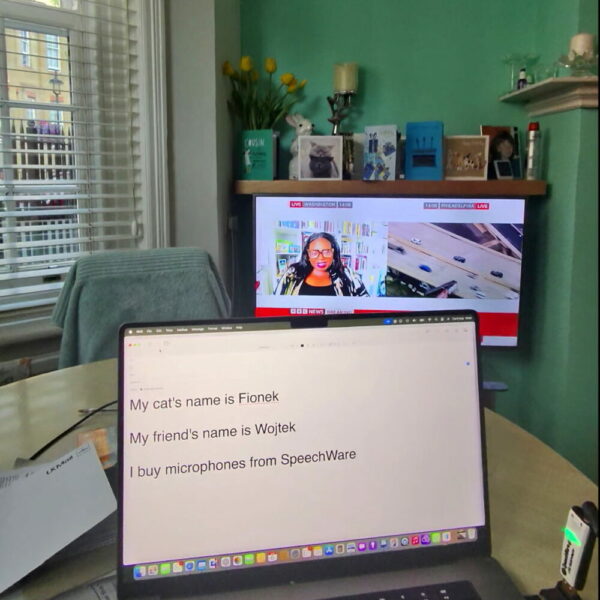

My dictation setup includes Apple Voice Control on an M1 MacBook Pro with the KeyboardMike Plus microphone from SpeechWare.

I shot the video demonstration in this post hands-free with a voice command to the Ray-Ban Meta smart glasses.