We’re just a few hours away from WWDC 2020, Apple’s annual Worldwide Developers Conference. This year it is a completely online event due to the COVID-19 pandemic. The virtual keynote takes place later today, when the company will unveil the next major releases of its operating systems, such as iOS 14, watchOS 7 and macOS 10.16, as well as new hardware.

Read on as I recap all the new features and improvements I hope to see at WWDC 2020 to make Apple devices much more accessible to people with severe physical disabilities who have problems interacting with screens, keyboards and trackpads.

iOS 14

iOS 14 will be a prominent announcement. WWDC is where Apple unveils details of the latest version of iOS to give developers time to prepare their apps before it’s released to the public. Several months of beta releases then follow before the final version of iOS 14 is released to the public in September.

Among the features hotly tipped to appear at WWDC are a list view for the iPhone home screen, home screen widgets, and the ability to set third-party web browsers and email apps as defaults.

When it comes to accessibility for people with physical disabilities, Apple needs to harness the power of Siri to control more of the iPhone’s functions by voice. This would benefit not only disabled users but anyone who wants to control more of the iPhone with their voice. For example, Siri should be able to end a phone call with a “Hey Siri, end call” command, in the same way the voice assistant can initiate a phone call. Similarly, Siri should be able to answer an incoming call with a “Hey Siri, answer” command. These are basic phone functions that should be controllable by voice. The company also needs to introduce the ability to toggle Auto-Answer on and off with a Siri voice command, such as “Hey Siri, turn on Auto-Answer”. You can do this with most accessibility features but, strangely, not this one. It is more than ironic that a feature designed for people who cannot use their hands to interact with the iPhone screen requires them to touch the screen to switch it on.

It would also be useful to use Auto-Answer with Shortcuts. For example, you put your AirPods in your ears and a shortcut automatically turns on the Auto-Answer feature, then turns it off again when you remove your AirPods.

It would be really helpful to be able to make the hierarchy of the Accessibility Menu in iOS 14 completely customisable to the user’s own disability. I spend too much time scrolling past the settings for visually impaired people to find the accessibility settings relevant to me.

Announce Messages with Siri will become more useful when other messaging services, such as Facebook Messenger and WhatsApp, are integrated. Apple needs to release an API to developers so they can implement this capability in their apps. I understand that Apple has invested a lot in developing Announce Messages with Siri, but it needs to extend the feature for accessibility reasons. It’s simply the right thing to do. Millions of people use Facebook Messenger and WhatsApp on their iOS devices, and it is a travesty that at the moment we can’t access them by voice in the same way we can iMessage.

watchOS 7

This is tipped to be a big year for the Apple Watch, and watchOS 7 is set to bring a variety of improvements, including a native sleep app, new health features, a new Kids mode, and more.

I have had two Apple Watch models now, the Series 3 Cellular and the Series 5 Cellular, and I have always felt that Apple has not exploited the full potential of the Watch for accessibility and for people with severe physical disabilities. As a device, it has so much potential for health, personal safety, and keeping in touch. It’s time for Apple to think beyond the wheelchair workout feature.

The Watch needs the Auto-Answer accessibility feature added so that incoming phone calls can be answered automatically in the same way they can on the iPhone. The cellular version of the watch is, after all, as much of a phone as the iPhone.

Similarly, the cellular version of the Watch needs a smarter Siri, with the ability to answer and end calls with a “Hey Siri” voice command.

The Watch does not currently support the Announce Messages with Siri feature, and it should. At the moment, if you receive a text message on your Watch, you need to physically interact with the screen, and this is not possible for everyone.

The Apple Watch has become a fitness and health focused device over the years and has excelled at this. Hopefully, Apple will see that accessibility for everyone is intrinsically linked to that focus.

macOS 10.16

There are very few concrete rumours about what will be included in macOS 10.16, which will be the follow-up to macOS Catalina 10.15. One of the few strong rumours is that Apple will improve the Messages app and bring it more in line with the Messages app in iOS.

As for accessibility and Mac computers, it is not clear if there is any new hardware on the cards, but if there is, the one thing I have been waiting a long time for is for Apple to bring Face ID to the Mac.

Windows Hello computers have had hands-free facial recognition login for several years and, strangely, whilst Apple has brought Face ID to the iPhone and the iPad, it has yet to bring the technology to the Mac. It would be great to think the company is working on new high-quality cameras that can offer Face ID login on its next MacBook, allowing us to sit down and get to work right away, hands-free. It would also be a massive boost for people who, because of physical disability, find it difficult to use their Mac keyboard and trackpad for tasks such as typing in passcodes to log in.

I’ve seen Windows Hello in action on a Microsoft Surface Pro laptop and it really is a game changer for accessibility. I dream of the day when I can ride up to my MacBook Pro in my wheelchair, the screen will unlock and I will be logged in automatically as I look at it, and Voice Control will take over and very accurately navigate as I request, and dictate messages and emails as I speak, without the need to touch the keyboard or trackpad.

Voice Control

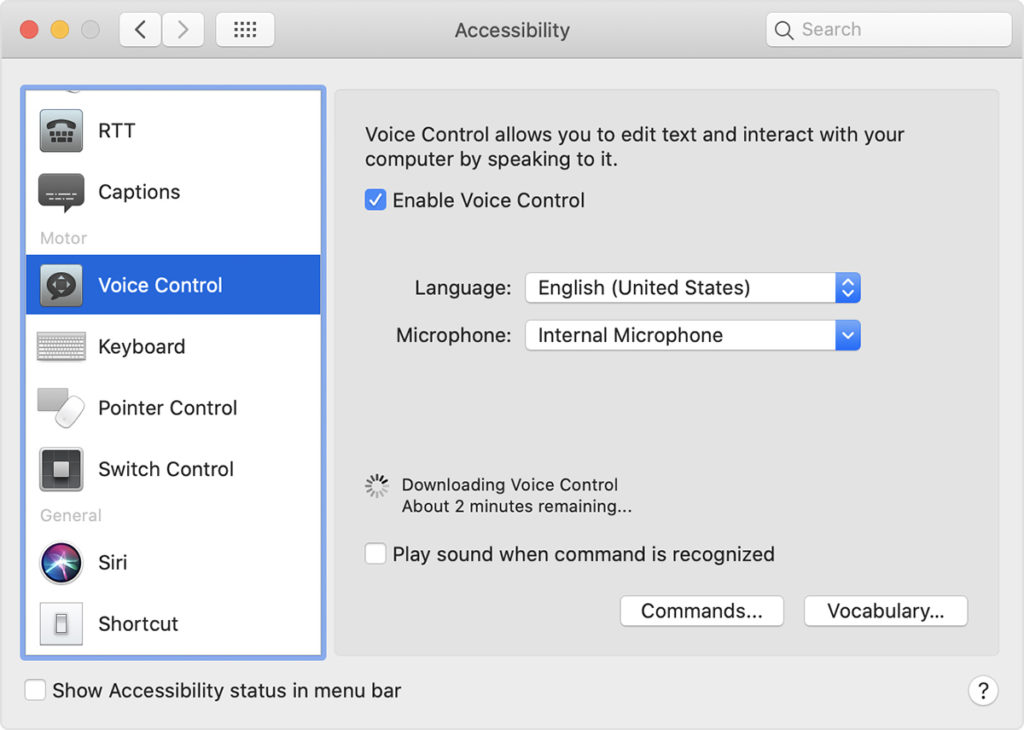

Voice Control, Apple’s speech-to-text application, was the standout accessibility feature at WWDC last year. It is now baked into Apple devices and offers physically disabled people, and anyone who owns a Mac computer, iPhone or iPad, the ability to precisely control, dictate and navigate by voice commands alone.

However, as I have found over the past year, it needs improving in a number of key ways if it is to become the productive tool physically disabled people have a right to expect.

Apple needs to fix a serious bug that mangles dictated text in the main Google search text box, which millions of people use every day to acquire knowledge. There are a number of other text boxes on the internet where Voice Control dictation does not perform accurately.

The application launched with support for US English only when it comes to operating with the more advanced Siri speech-to-text engine. Apple needs to add other languages, including UK English, if Voice Control is going to become useful for me as someone living in the UK.

More advanced support and settings for microphones would improve the accuracy of dictation, as would the ability to train words when adding them to your custom vocabulary, so the same dictation errors do not keep repeating.

Roundup

These are important features and improvements I hope to see at WWDC, rather than ones I expect to see. I have spent the last 12 months feeding back to Apple on the need for these features, so now is the time to find out if the company has been listening.

If some of them can be implemented in iOS 14, watchOS 7, and macOS 10.16, they will go a long way to make the iPhone, Watch and Mac computer truly accessible to people who have problems handling the iPhone and touching the screen, interacting with the Watch face, and touching the Mac keyboard and trackpad because of severe physical disability.

These accessibility improvements, and making it easier to control Apple devices with your voice, should also be popular with a lot of people generally.