Last August, I published an article on The Register, drawing attention to a pressing issue affecting 250 million individuals globally: the inadequacy of voice recognition technology for those with non-standard speech.

This is a significant challenge for stroke survivors and for people with conditions such as cerebral palsy, amyotrophic lateral sclerosis (ALS), and muscular dystrophy, which I live with myself. Despite advancements in AI and voice recognition, these technologies often fail to support those who need them most.

In my Register piece, I outlined several critical issues with current voice recognition systems:

Limited flexibility

Many voice recognition systems are narrowly designed, failing to accommodate the varied speech patterns of users with non-standard speech. This has left many potential beneficiaries unable to utilise these technologies effectively.

Lack of personalisation

Existing systems often cannot integrate custom vocabularies or handle complex words, a crucial feature for users whose speech differs significantly from typical patterns. This shortcoming frequently results in ineffective and frustrating voice commands.

Technological exclusion

Despite the promise of AI, individuals with speech impairments have often been excluded from technological advancements. This oversight has perpetuated a digital divide, leaving a large portion of the population without access to transformative tools.

As a passionate advocate for more inclusive voice technology, I have continually pushed for improvements that make voice recognition accessible to everyone, regardless of their speech patterns. My advocacy included direct appeals to tech giants like Apple, encouraging them to lead in creating more inclusive technology.

Earlier this month, this advocacy bore fruit. Apple announced substantial voice accessibility enhancements that address the issues I had highlighted. Here’s how they plan to make a difference:

Recognition of atypical speech

Apple’s new “Listen for Atypical Speech” feature uses on-device machine learning to recognise the speech patterns of users with acquired or progressive conditions that affect speech. It aims to accurately capture and respond to the voices of the 250 million people worldwide with non-standard speech. Because the processing happens on the device itself, the feature is designed to protect privacy while remaining responsive.

Advanced Voice Control

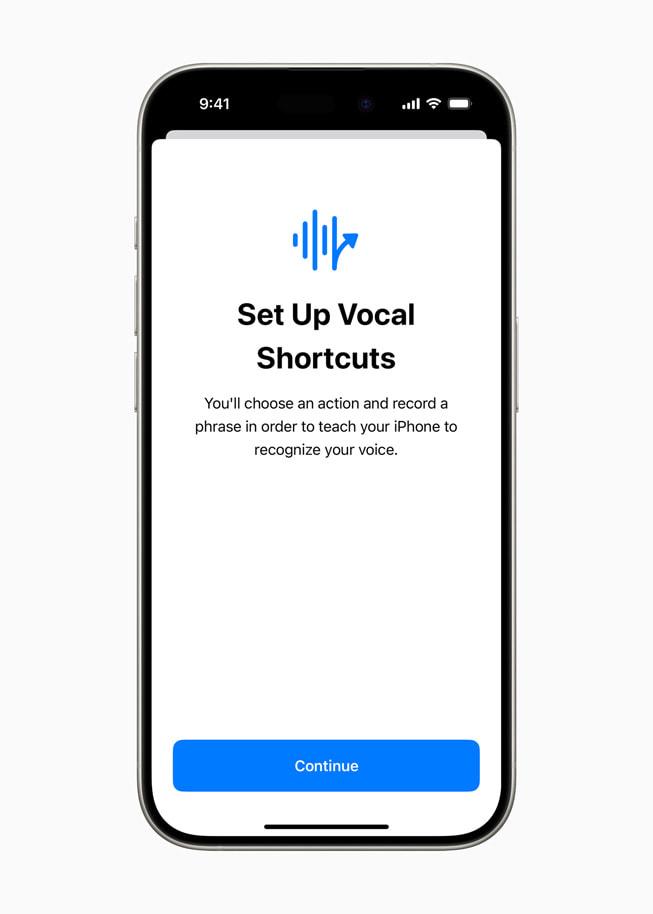

Voice Control has been significantly upgraded to support custom vocabularies and complex words. This is a development I have championed since Voice Control’s introduction five years ago. Customisable vocabularies enable users to communicate effectively with their devices, regardless of the specific terminology they use. This enhancement is a game-changer for those with unique or complex speech patterns.

A leap toward inclusivity

These voice enhancements represent a major advancement in making technology more inclusive. Apple’s dedication to addressing the needs of users with non-standard speech is commendable, showcasing the power of advocacy and the impact of listening to user feedback. As someone deeply involved in highlighting these issues, I am thrilled to see these changes realised.

Additional developments

Apple has also introduced several other accessibility improvements set to be available on their devices later this year, including:

• Eye Tracking: This new feature allows iPhone and iPad users with physical disabilities to control their devices with their gaze, using a system similar to Vision Pro.

• VoiceOver: For users who are blind or have low vision, VoiceOver will include new voices, a flexible Voice Rotor, custom volume control, and the ability to customise VoiceOver keyboard shortcuts on Mac.

• Magnifier: This will offer a new Reader Mode and the option to easily launch Detection Mode with the Action button.

Conclusion

These new features highlight the importance of advocacy and the impact of persistent efforts. Having long campaigned on these issues, I find it gratifying to see meaningful changes come to fruition. Apple’s voice accessibility improvements are a victory not just for those with non-standard speech but also for inclusivity and technological innovation.

As we celebrate these advancements, it is crucial to keep pushing for further improvements to ensure that technology serves everyone. For now, I am celebrating this significant milestone, thrilled that Apple has listened and taken essential steps to make voice recognition technology accessible to all.

These new features are expected to arrive with the iOS 18 public beta, likely in early July, ahead of the full release of iOS 18 in the autumn. It remains to be seen whether some AI-driven features will be limited to newer Apple devices.

Read more about Apple’s accessibility announcements at Apple Newsroom.